I have been working with a system where one server with clients connected has to send heartbeat messages to another server on behalf of the clients. The heartbeat messages are sent at a 10 minute interval for each client, which means that if the server is restarted and all of the clients reconnect in short order, the heartbeat messages are all sent within a small interval. This isn't a particular problem in and of itself, but it annoys me a little that the monitoring graph is so spiky. Over time the clients naturally disconnect and reconnect which means the heartbeat rate smooths out, but it takes ages.

Can I do better? This post details my idle evening attempts to smooth out the message rate. I have one rule to keep to, although it's not too critical, which is to keep as close to the 600 second heartbeat interval as possible.

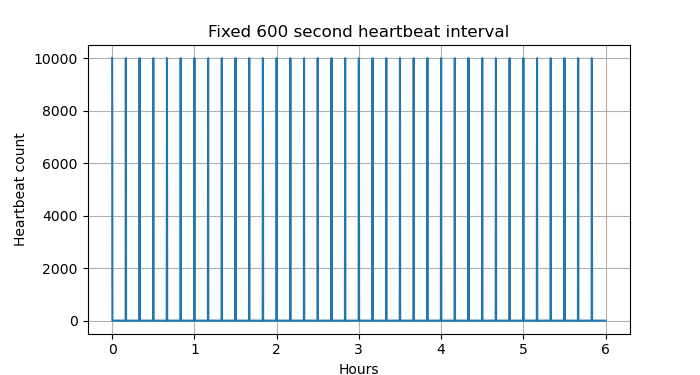

Fixed 600 second interval

For completeness, this is my simulated starting point - 10,000 clients all connecting at time 0 and being assigned a fixed 600 second heartbeat per client, leading to a 10,000 high spike every 600 seconds.

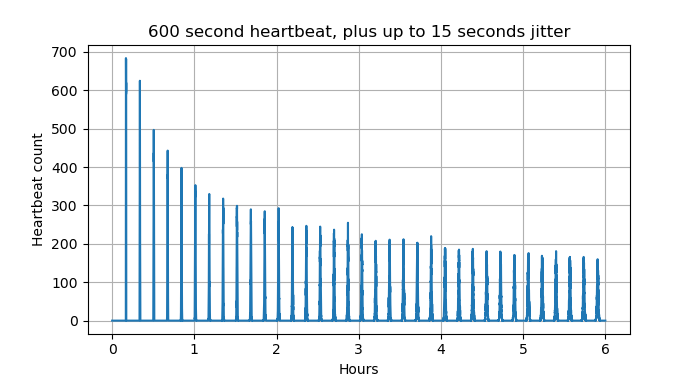

Adding jitter

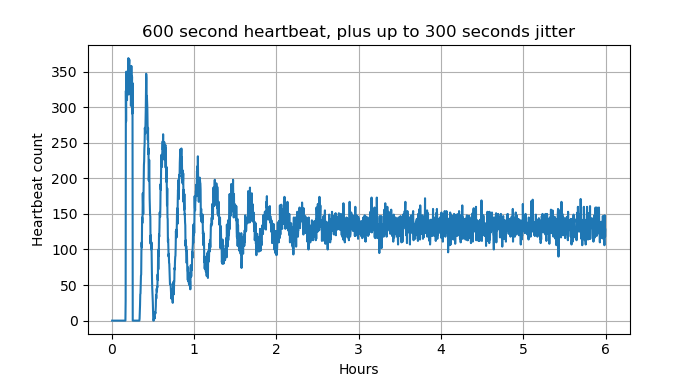

A classic approach is to add some random jitter, i.e. adding a random value on top of the fixed interval each time a new heartbeat interval is calculated. This gradually spreads out the clients, depending on the amount of jitter used.

Keeping the jitter low does bring down the peak values a good amount, but still takes ages to have any real effect. This is for jitter in the interval of 0-15 seconds (up to 2.5% of the full range):

Even after 6 hours it's still looking very spikey. I omitted the initial 10,000 high spike which would really mess with the scale.

Using even 300 seconds of jitter it still takes more than 2 hours to truely settle down, and we're potentially up at 15 minute heartbeat message intervals. In practice that would probably be ok, but it doesn't feel right.

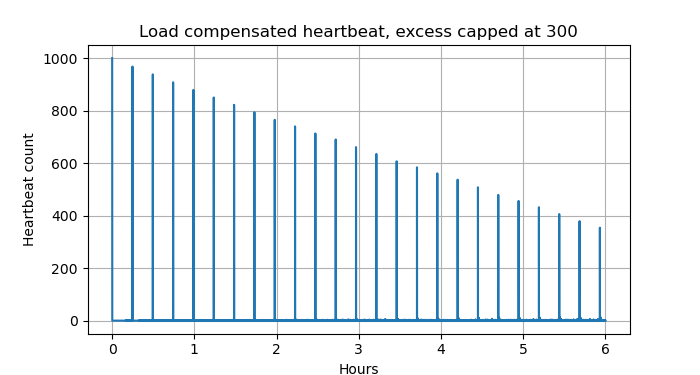

Accounting for the current load

My next thought was to take account of the recent rate of heartbeat messages and use that to modify what the next heartbeat interval would be. The idea being that if we have a high load right now, that implies that in 600 seconds there would consequently also be a high load, so we should push the next heartbeat interval out a bit further. This is nice, because we should only need to use longer intervals when there is a peak load. Once everything is smooth, the heartbeats should be constant. I thought I'd use an exponential load equation to calulate the current load, because I've used it before and it's easy to tweak.

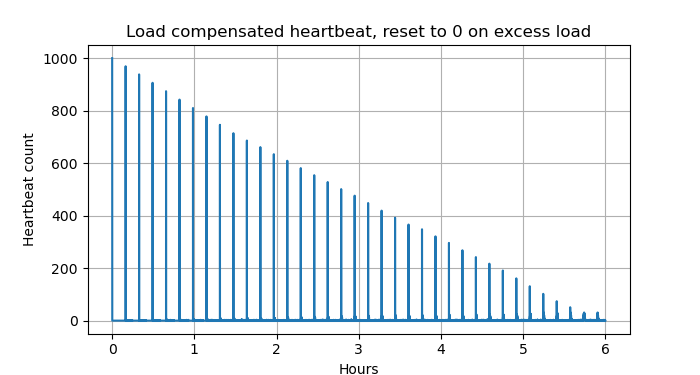

Unfortunately this is again limited by the fact that I don't want to mess with the heartbeat interval too much, meaning that I don't have much scope for modifying each client, and hence can only slowly make changes. This next result shows what happens with one method I tried, where the calculated load is added on as extra heartbeat interval, capped at 300 seconds - too high for my liking, but still producing a too slow result.

Interestingly, if instead of capping at 300 I reset the extra heartbeat interval to 0 when load is too high, the response is much quicker, although still too slow given that this only shows 1000 clients.

All in all, I don't like this approach, it feels like something trying to be too clever.

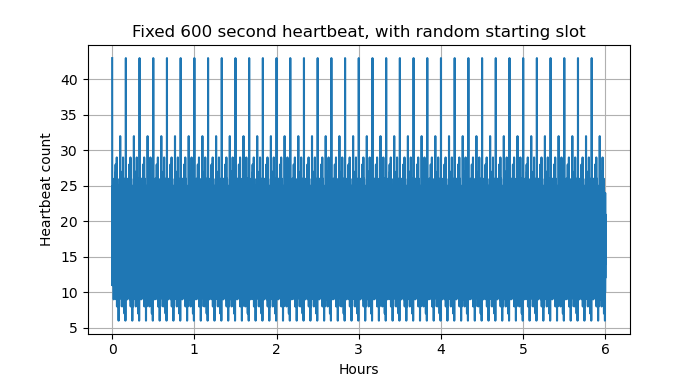

Random slot based approach

Whilst walking our dog, it came to me that I'd been thinking about this all wrong. With a heartbeat interval of 10 minutes I have 600 different slots that I want to put clients in, leading to a perfect result being 10000/600 = 16.67 clients per slot. If I can get the clients into the right slot in the first place, then I don't need to mess with the subsequent heartbeat intervals.

That leads to this result which is much better, but still not perfect.

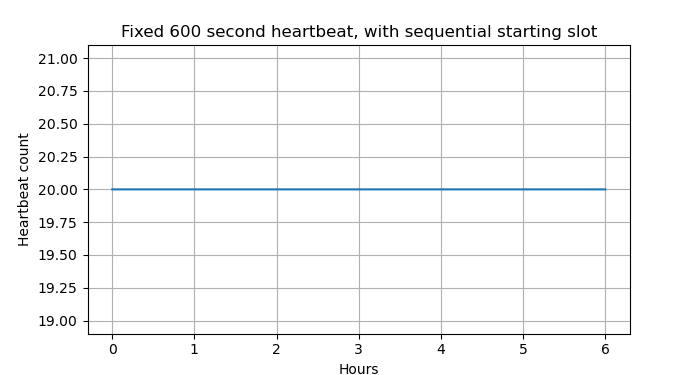

Sequential slot allocation

If I keep track of what slot I've most recently allocated a client to, I can allocate the next client to the next slot. Easy peasy, why didn't I think of this earlier?

I've cheated a little for this graph, using a total of 12,000 clients so all of the slots are equally full.

So that's it, all sorted. Well, not quite. This is where my extremely simple

simulation hides a lot of the complexity that matters. I've connected all of my

clients at t=0 and assigned their next heartbeat time to be value mod 600,

with value incrementing by one each time. That works because all of the

clients are connecting in the same second, but doesn't work otherwise. If I

take this same naive approach when connecting a random number of clients

between 0 and 200 per second, we get this result.

What's happening is that I'm using the next slot number to allocate a slot for each client, but without referencing it to an absolute time. In other words, the first slot 0 doesn't necessarily match the next slot 0, and they should.

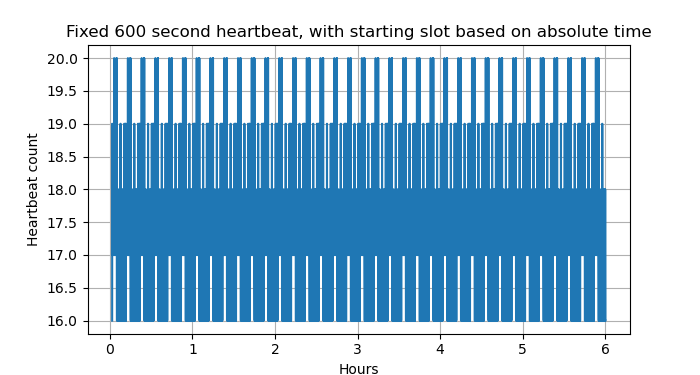

To fix this, we can allocate the slots so that slot 0 is always absolute time

mod 600, slot 1 is always (absolute time mod 600) plus 1, and so on. Result

below:

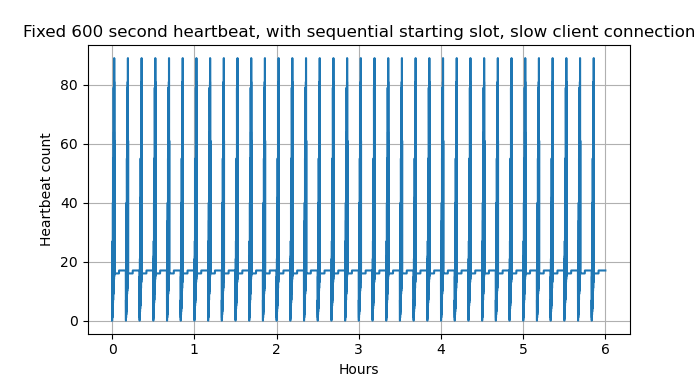

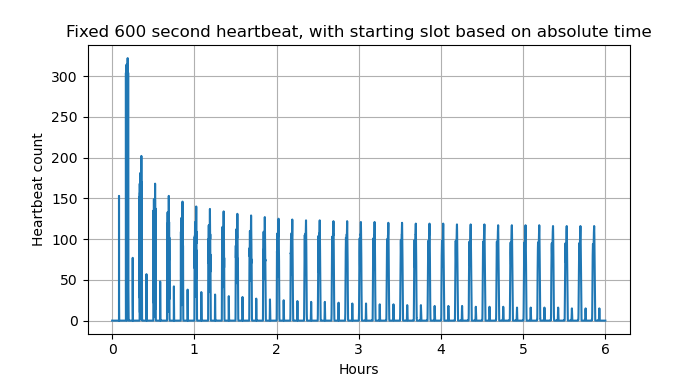

There is one last thing to consider. it's not really important for the key point of this post, but does show the importance of getting the model right.

The current real implementation uses a linked list to check when heartbeats are due. When a client connects, it is by definition the last client that needs to send a heartbeat, and is added to the end of the list. This results in a naturally sorted list. When the heartbeat check is made, we only iterate over the part of the list where clients need to send a heartbeat. The client is then removed from the front of the list and added back to the back, keeping it sorted.

The simulations I've done keeps the list sorted at all times, which doesn't match what would happens if the next heartbeat time is allocated based on slot, but the client is still added to the back of the list. If we do that, the list is no longer sorted and sometimes clients that should have a heartbeat sent get missed, because they are in the wrong place in the list. Then advancing through the list we get to clients that are overdue a heartbeat, and that manifests as a spiky response.

Fixing this requires the clients to be added to the list in order which is an O(n) operation, but is only done on the client connection so is not as bad as it could be.